NVIDIA unveils the H200 chip, a game-changer for AI computing

NVIDIA has upgraded its AI computing platform with the announcement of the NVIDIA HGX H200. The platform is built around the NVIDIA H200 Tensor Core GPU, whose advanced memory is designed to handle massive amounts of data for generative AI and high-performance computing (HPC) workloads. With HBM3e, a faster and larger type of memory, the H200 accelerates generative AI and large language models (LLMs) while also advancing scientific computing for HPC.

Thanks to HBM3e, the H200 offers a staggering 141GB of memory at 4.8 terabytes per second. That is nearly double the capacity and 2.4 times the bandwidth of the NVIDIA A100, the predecessor NVIDIA uses for this comparison.
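These ratios are easy to check with a little arithmetic. The sketch below divides the H200's figures by those of two earlier GPUs. The H200 numbers come from this article; the A100 80GB SXM (80 GB, about 2.039 TB/s) and H100 SXM (80 GB, 3.35 TB/s) figures are assumptions taken from NVIDIA's public datasheets, so treat the output as a rough sanity check rather than an official comparison.

```python
# Per-GPU memory specs: capacity in GB, bandwidth in TB/s.
# H200 values are from the article; A100 80GB SXM and H100 SXM values
# are assumptions based on NVIDIA's public datasheets.
SPECS = {
    "H200": {"capacity_gb": 141, "bandwidth_tbs": 4.8},
    "A100 80GB": {"capacity_gb": 80, "bandwidth_tbs": 2.039},
    "H100 SXM": {"capacity_gb": 80, "bandwidth_tbs": 3.35},
}


def ratios(new: str, old: str) -> tuple[float, float]:
    """Return (capacity ratio, bandwidth ratio) of GPU `new` over GPU `old`."""
    n, o = SPECS[new], SPECS[old]
    return (n["capacity_gb"] / o["capacity_gb"],
            n["bandwidth_tbs"] / o["bandwidth_tbs"])


for old in ("A100 80GB", "H100 SXM"):
    cap, bw = ratios("H200", old)
    print(f"H200 vs {old}: {cap:.2f}x capacity, {bw:.2f}x bandwidth")
```

Under these assumptions, only the A100 comparison lands near 2.4x bandwidth (about 2.35x); against the H100 SXM the bandwidth gain is closer to 1.4x.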

Ready for the good news? H200-based systems are expected to begin shipping in the second quarter of 2024, and they promise a remarkable performance jump. Imagine this: according to NVIDIA, the H200 nearly doubles inference speed on Llama 2, a 70-billion-parameter LLM, compared with its predecessor, the H100. That is a leap you won't want to miss, so mark your calendar. This is not just another system; it is a performance powerhouse that will soon be on the market.
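Why does memory bandwidth matter so much for LLM inference? A common back-of-the-envelope model treats token-by-token decoding at batch size 1 as bandwidth-bound: each new token requires reading every weight from memory once. The sketch below applies that simplified model to a 70-billion-parameter model. The FP8 weights (one byte per parameter), the H100 SXM bandwidth of 3.35 TB/s, and the model itself are assumptions for illustration, not NVIDIA's benchmark methodology.

```python
# Simplified, bandwidth-bound model of LLM decoding at batch size 1.
# Assumptions (not from the article): every weight is streamed from HBM
# once per generated token; compute, KV-cache traffic, and software
# overheads are ignored. Real per-token latency will be higher.

LLAMA2_70B_PARAMS = 70e9  # parameter count, from the article


def min_decode_latency_ms(params: float, bytes_per_param: float,
                          bandwidth_tbs: float) -> float:
    """Lower bound on per-token decode latency in milliseconds."""
    weight_bytes = params * bytes_per_param
    seconds = weight_bytes / (bandwidth_tbs * 1e12)
    return seconds * 1e3


# Assumed FP8 weights (1 byte/param) so the model fits on either GPU.
# The H100 SXM bandwidth (3.35 TB/s) is an assumption from NVIDIA's datasheet.
h200 = min_decode_latency_ms(LLAMA2_70B_PARAMS, 1, 4.8)
h100 = min_decode_latency_ms(LLAMA2_70B_PARAMS, 1, 3.35)
print(f"H200: >= {h200:.1f} ms/token, H100: >= {h100:.1f} ms/token")
print(f"Bandwidth-only speedup: {h100 / h200:.2f}x")
```

In this toy model, bandwidth alone accounts for roughly a 1.4x speedup. Any gain beyond that, such as NVIDIA's "nearly double" figure, would come from factors the sketch ignores, for example the larger memory leaving room for bigger batches.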

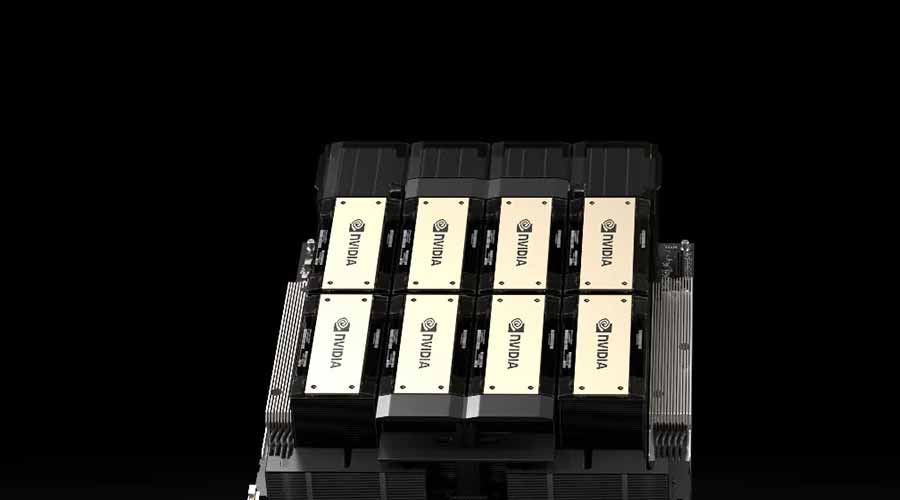

Better still, the H200 is not limited to single units. It will be available in NVIDIA HGX H200 server boards with four- and eight-way configurations, which are compatible with both the hardware and software of HGX H100 systems. It is also available in the NVIDIA GH200 Grace Hopper Superchip with HBM3e, announced in August.

With these options, the H200 can be deployed in every type of data center: on-premises, cloud, hybrid-cloud, and edge. NVIDIA's global ecosystem of partner server makers, including well-known brands such as ASUS, Dell Technologies, and Lenovo, can use the H200 to turbocharge their existing systems.

Now let's talk power. An eight-way HGX H200 is a formidable machine, delivering over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory. And for the showpiece: combine the H200 with NVIDIA Grace CPUs over the ultra-fast NVLink-C2C interconnect, and you get the GH200 Grace Hopper Superchip with HBM3e. It's not just a chip; it has the potential to be a superstar.
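The board-level figures follow from simple multiplication and division. The sketch below scales the per-GPU memory from this article to the four- and eight-way HGX H200 configurations and divides the eight-way FP8 figure by eight to get an implied per-GPU number. It assumes decimal units (1 TB = 1000 GB) and treats "over 32 petaflops" as exactly 32, so the results are approximate.

```python
# Figures taken from the article; units are decimal (1 TB = 1000 GB).
H200_MEMORY_GB = 141       # per-GPU HBM3e capacity
HGX_FP8_PFLOPS_8WAY = 32   # "over 32 petaflops" for an eight-way board, treated as 32


def aggregate_memory_tb(num_gpus: int, per_gpu_gb: float = H200_MEMORY_GB) -> float:
    """Total HBM across an HGX board, in TB."""
    return num_gpus * per_gpu_gb / 1000


for n in (4, 8):
    print(f"{n}-way HGX H200: {aggregate_memory_tb(n):.3f} TB of HBM3e")

# Implied FP8 compute per GPU, assuming the board total divides evenly.
per_gpu_fp8 = HGX_FP8_PFLOPS_8WAY / 8
print(f"Implied FP8 compute per GPU: about {per_gpu_fp8:.0f} PFLOPS")
```

Eight GPUs at 141GB each come to 1,128GB, which is where the rounded "1.1TB" figure comes from; a four-way board would hold about half that.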